Install Twisted and Scrapy

pip install twisted

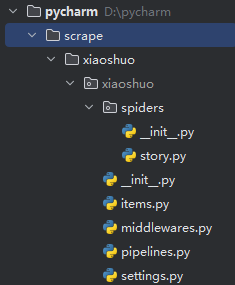

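pip install scrapy

Choose a working directory and set up the Scrapy project files. Create the folder yourself first, then switch to it:

d:

cd \pycharm\scrape\xiaoshuo

scrapy crawl story

Open PyCharm and open the project directory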

Write story.py

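import scrapy


class StorySpider(scrapy.Spider):
    name = 'story'  # spider name, used by "scrapy crawl story"
    allowed_domains = ['m.xbiqugew.com']  # domain the spider is allowed to crawl
    start_urls = ['https://m.xbiqugew.com/book/53099/36995449.html']  # first page to fetch

    def parse(self, response):
        title = response.xpath('//h1[@class="nr_title"]/text()').get()
        content = response.xpath('string(//div[@id="nr1"])').get()

        # The "next page" link is relative to the book directory.
        # .get() returns None when the link is missing (last page).
        next_href = response.xpath('//a[@id="pb_next"]/@href').get()
        next_url = None
        if next_href:
            next_url = 'https://m.xbiqugew.com/book/53099/' + next_href
            print(next_url)

        # Split on whitespace and rejoin so each paragraph sits on its own line
        paragraphs = content.split()
        formatted_content = '\n'.join(paragraphs)

        yield {
            'title': title,
            'content': formatted_content
        }

        # Follow the next page with the same callback until no link is left
        if next_url:
            yield scrapy.Request(next_url, callback=self.parse)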
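The two string-handling steps in parse can be tried outside Scrapy. The following is only a sketch and not part of the original spider: format_content mirrors the split/join step, and build_next_url is a hypothetical helper that resolves the link with the standard library's urljoin instead of string concatenation, returning None when there is no next link. The page name 36995450.html used when trying it out is an assumed example, not a value taken from the site.

```python
from typing import Optional
from urllib.parse import urljoin


def format_content(raw: str) -> str:
    """Put every whitespace-separated fragment of the text on its own line."""
    # str.split() with no arguments splits on any run of whitespace
    # (spaces, newlines, the full-width space U+3000) and drops empty pieces.
    return "\n".join(raw.split())


def build_next_url(page_url: str, href: Optional[str]) -> Optional[str]:
    """Resolve a possibly relative href against the URL of the current page."""
    if not href:
        return None  # no "next" link: treat this as the last page
    return urljoin(page_url, href)
```

Note that splitting on whitespace suits Chinese prose, where a paragraph has no internal spaces; applied to English text it would put every word on its own line. Calling build_next_url('https://m.xbiqugew.com/book/53099/36995449.html', '36995450.html') resolves the file name against the book directory of the current page.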

Write pipelines.py

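class XiaoshuoPipeline:
    def open_spider(self, spider):
        self.file = open('万相之王.txt', 'w', encoding='utf-8')
        # Title and content of a chapter part still waiting for its continuation.
        # They must exist before process_item reads them.
        self.prev_title = None
        self.prev_content = None

    def close_spider(self, spider):
        self.file.close()

    def process_item(self, item, spider):
        title = item['title']
        content = item['content']

        # Check whether the page carries the "本章未完" (chapter not finished) notice
        if "本章未完" in content:
            # Keep the current title and content and wait for the next part
            self.prev_title = title
            self.prev_content = content
        else:
            # If an unfinished part is pending
            if self.prev_content:
                # Find the last position in the pending text of the first character
                # of the second part, and trim the pending text there.
                # content[:1] is '' for empty content, so nothing is trimmed then.
                first_char = content[:1]
                last_index = self.prev_content.rfind(first_char)
                if last_index != -1:
                    self.prev_content = self.prev_content[:last_index]
                # Join the pending part and the current part
                combined_content = self.prev_content + content
                # Write the joined text under the first title; the second title is dropped
                info = self.prev_title + "\n" + combined_content + '\n'
                self.file.write(info)
                # Clear the pending part
                self.prev_title = None
                self.prev_content = None
            else:
                # Write the current title and content
                info = title + "\n" + content + '\n'
                self.file.write(info)
        return item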
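The trimming step inside process_item is easier to check in isolation. The sketch below pulls it out into a standalone function; merge_parts is a hypothetical name, and the sample strings (with "[more]" standing in for the "本章未完" notice) are made up for illustration. It assumes, as the pipeline does, that the second page starts with text that already appears near the end of the first page.

```python
def merge_parts(prev_content: str, content: str) -> str:
    """Join two parts of one chapter, trimming the tail of the first part."""
    if not content:
        return prev_content  # nothing to append
    # Cut the first part at the LAST occurrence of the second part's first
    # character; everything from there on is assumed to be repeated text
    # or the "not finished" notice.
    cut = prev_content.rfind(content[0])
    if cut != -1:
        prev_content = prev_content[:cut]
    return prev_content + content


if __name__ == "__main__":
    first_page = "alpha\nbeta\n[more]"
    second_page = "beta\ngamma"
    print(merge_parts(first_page, second_page))
```

Because the match is on a single character, the cut can land in the wrong place when that character also appears later in the first part, so this is best treated as a heuristic rather than a reliable de-duplication.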

Edit settings.py (uncomment and adjust the following settings)

# Override the default request headers:
DEFAULT_REQUEST_HEADERS = {
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
    "Accept-Language": "en",
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36'
}

ITEM_PIPELINES = {
    "xiaoshuo.pipelines.XiaoshuoPipeline": 300,
}

ROBOTSTXT_OBEY = True

DOWNLOAD_DELAY = 0.4

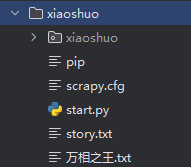
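Since this spider fetches one page at a time (each page yields the request for the next one), the DOWNLOAD_DELAY waits add up over the whole book. The following is a rough sketch for estimating that waiting time. It assumes Scrapy's default RANDOMIZE_DOWNLOAD_DELAY behaviour, where each wait falls between 0.5 and 1.5 times DOWNLOAD_DELAY; delay_bounds and the page count are hypothetical, and network latency is ignored.

```python
from typing import Tuple


def delay_bounds(pages: int, download_delay: float = 0.4) -> Tuple[float, float]:
    """Return (minimum, maximum) total seconds spent waiting between requests."""
    # Each wait is assumed to lie in [0.5 * delay, 1.5 * delay].
    low = pages * download_delay * 0.5
    high = pages * download_delay * 1.5
    return low, high


if __name__ == "__main__":
    # 1000 pages is an assumed figure, not the real length of the book.
    low, high = delay_bounds(1000)
    print(f"waiting time between {low:.0f}s and {high:.0f}s")
```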

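Write the launcher file start.py. Mind the path: it has to be run from inside the project directory (the one that contains scrapy.cfg).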

from scrapy import cmdline

cmdline.execute(['scrapy', 'crawl', 'story'])
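If start.py may be launched from different working directories, one way to handle the path issue is to locate the project root first. The helper below is a sketch, not part of the original project: find_project_root is a hypothetical name, and it relies only on the fact that a Scrapy project keeps a scrapy.cfg file at its root.

```python
from pathlib import Path
from typing import Optional


def find_project_root(start: Path) -> Optional[Path]:
    """Walk upward from `start` until a directory containing scrapy.cfg is found."""
    start = start.resolve()
    for directory in (start, *start.parents):
        if (directory / "scrapy.cfg").is_file():
            return directory
    return None  # no Scrapy project found above `start`
```

In start.py, one could call os.chdir(find_project_root(Path(__file__).parent)) before cmdline.execute, after checking that the result is not None.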