Main spider code:

```python
import scrapy


class NovelSpider(scrapy.Spider):
    name = "novel_spider"
    allowed_domains = ["piaotia.com"]
    start_urls = ["https://www.piaotia.com/booksort1/0/1.html"]

    def parse(self, response):
        """Start page (book listing): scrapy.Spider sends start_urls responses here."""
        self.logger.info(f"Parsing start page: {response.url}")
        for url in response.xpath('//td[@class="odd"]/a/@href').getall():
            full_url = response.urljoin(url)
            self.logger.info(f"Requesting book URL: {full_url}")
            yield scrapy.Request(url=full_url, callback=self.parse_book)

    def parse_book(self, response):
        """Book detail page: read the book title and follow the catalog link."""
        self.logger.info(f"Parsing book details: {response.url}")
        catalog_link = response.xpath("//caption/a/@href").get()
        book_name = response.xpath("//span/h1/text()").get()
        if catalog_link and book_name:
            yield scrapy.Request(
                url=response.urljoin(catalog_link),
                callback=self.parse_catalog,
                cb_kwargs={"book_name": book_name.strip()},
            )
        else:
            self.logger.warning(f"Missing catalog link or book name for {response.url}")

    def parse_catalog(self, response, book_name):
        """Catalog page: request every chapter."""
        self.logger.info(f"Parsing catalog for book: {book_name}")
        # Iterate over the <a> nodes so each href stays paired with its own text;
        # zipping two separate href/text lists misaligns if a link has no text.
        for a in response.xpath("//li/a"):
            link = a.xpath("@href").get()
            if not link:
                continue
            name = (a.xpath("text()").get() or "").strip()
            full_link = response.urljoin(link)
            self.logger.info(f"Found chapter: {name}, requesting: {full_link}")
            yield scrapy.Request(
                url=full_link,
                callback=self.parse_chapter,
                cb_kwargs={"book_name": book_name, "chapter_name": name},
            )

    def parse_chapter(self, response, book_name, chapter_name):
        """Chapter page: the text sits directly in <body> as bare text nodes."""
        self.logger.info(f"Parsing chapter: {chapter_name} of book: {book_name}")
        # Prefer the page's <h1>; fall back to the name from the catalog.
        title = (response.xpath("//h1/text()").get() or "").strip() or chapter_name
        lines = (t.strip() for t in response.xpath("//body/text()").getall())
        # Drop empty lines and any line that merely repeats the title.
        content = "\n".join(line for line in lines if line and line != title)
        yield {"chapter_name": title, "content": content, "book_name": book_name}
        self.logger.info(f"Processed chapter: {chapter_name} of book: {book_name}")
```
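The chapter-cleaning step in `parse_chapter` can be checked without any network access. The sketch below pulls that logic into a standalone function. The function name `clean_chapter_text` is hypothetical, not part of the project. It is fed made-up text nodes shaped like what `//body/text()` might return, including `\xa0` indentation left over from `&nbsp;`. These sample strings are assumptions, not captured from the site.

```python
def clean_chapter_text(text_nodes, title):
    """Mirror the cleaning in parse_chapter: strip each text node, then drop
    empty lines and any line that only repeats the chapter title."""
    lines = (node.strip() for node in text_nodes)  # str.strip() also removes \xa0
    return "\n".join(line for line in lines if line and line != title)


if __name__ == "__main__":
    # Made-up text nodes resembling //body/text() on a chapter page.
    nodes = ["\r\n", "\xa0\xa0\xa0\xa0First paragraph.\r\n", "Chapter 1", "  ", "\xa0\xa0Second paragraph."]
    print(clean_chapter_text(nodes, "Chapter 1"))
```

Each remaining text node becomes one paragraph line, which is the same shape of content the pipeline writes to disk.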

Pipeline code:

```python
import logging
import os
import re


class NovelPipeline:
    def __init__(self):
        self.book_folder = "books"
        self.logger = logging.getLogger(__name__)

    def open_spider(self, spider):
        os.makedirs(self.book_folder, exist_ok=True)
        self.logger.info(f"Spider {spider.name} started.")

    def close_spider(self, spider):
        self.logger.info(f"Spider {spider.name} closed.")

    @staticmethod
    def _safe_name(name):
        # Replace characters that are illegal in Windows file names ("/" also on Unix),
        # since book and chapter titles are used directly as path components.
        return re.sub(r'[\\/:*?"<>|]', "_", name).strip()

    def process_item(self, item, spider):
        book_name = item.get("book_name")
        chapter_name = item.get("chapter_name")
        chapter_content = item.get("content")
        if not book_name or not chapter_name or not chapter_content:
            self.logger.warning(f"Missing fields in item: {item}")
            return item

        # One directory per book, one .txt file per chapter.
        book_path = os.path.join(self.book_folder, self._safe_name(book_name))
        if not os.path.exists(book_path):
            os.makedirs(book_path, exist_ok=True)
            self.logger.info(f"Created directory for book: {book_name}")

        file_path = os.path.join(book_path, f"{self._safe_name(chapter_name)}.txt")
        try:
            with open(file_path, "w", encoding="utf-8") as f:
                f.write(chapter_content)
            self.logger.info(f"Wrote chapter: {chapter_name} for book: {book_name}")
        except OSError as e:
            self.logger.error(f"Failed to write chapter: {chapter_name} for book: {book_name}. Error: {e}")
        return item
```
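The pipeline names each file after its chapter title. As a result, a directory listing sorts chapters alphabetically rather than in reading order.

One way to keep the order, sketched here under assumptions, is to prefix each file name with a zero-padded chapter number. `chapter_filename` and its `index` argument are hypothetical. The spider above does not currently pass a chapter position, so `parse_catalog` would need to attach one to each request (for example from `enumerate`).

```python
import re

# Characters not allowed in Windows file names; "/" is also the Unix path separator.
_ILLEGAL_CHARS = re.compile(r'[\\/:*?"<>|]')


def chapter_filename(index: int, title: str, width: int = 4) -> str:
    """Return an order-preserving name such as '0007_Title.txt'.

    `index` is the hypothetical 0-based position of the chapter in the catalog.
    """
    safe_title = _ILLEGAL_CHARS.sub("_", title).strip() or "untitled"
    return f"{index + 1:0{width}d}_{safe_title}.txt"


if __name__ == "__main__":
    print(chapter_filename(6, "第七章 再见?"))  # 0007_第七章 再见_.txt
```

With that in place, `process_item` could build `file_name = chapter_filename(item["chapter_index"], chapter_name)`, where `chapter_index` is likewise a hypothetical item field. Zero padding makes plain string sorting match numeric order, provided the book has fewer chapters than the width allows (9999 for `width=4`).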

Settings:

```python
# Scrapy settings for source project
#
# For simplicity, this file contains only settings considered important or
# commonly used. You can find more settings consulting the documentation:
#
#     https://docs.scrapy.org/en/latest/topics/settings.html
#     https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
#     https://docs.scrapy.org/en/latest/topics/spider-middleware.html

BOT_NAME = "source"

SPIDER_MODULES = ["source.spiders"]
NEWSPIDER_MODULE = "source.spiders"

# Crawl responsibly by identifying yourself (and your website) on the user-agent
#USER_AGENT = "source (+http://www.yourdomain.com)"

# Obey robots.txt rules
ROBOTSTXT_OBEY = False

# Configure maximum concurrent requests performed by Scrapy (default: 16)
# CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)
# See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay
# See also autothrottle settings and docs
# DOWNLOAD_DELAY = 0.5
# The download delay setting will honor only one of:
# CONCURRENT_REQUESTS_PER_DOMAIN = 5
#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)
#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)
#TELNETCONSOLE_ENABLED = False

# Override the default request headers:
DEFAULT_REQUEST_HEADERS = {
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
    "Accept-Language": "en",
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36",
}

# Enable or disable spider middlewares
# See https://docs.scrapy.org/en/latest/topics/spider-middleware.html
#SPIDER_MIDDLEWARES = {
#    "source.middlewares.SourceSpiderMiddleware": 543,
#}

LOG_LEVEL = "INFO"

# Enable or disable downloader middlewares
# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
DOWNLOADER_MIDDLEWARES = {
    # Custom middleware defined in source/middlewares.py
    "source.middlewares.RandomUserAgentMiddleware": 543,
}

# Enable or disable extensions
# See https://docs.scrapy.org/en/latest/topics/extensions.html
#EXTENSIONS = {
#    "scrapy.extensions.telnet.TelnetConsole": None,
#}

# Configure item pipelines
# See https://docs.scrapy.org/en/latest/topics/item-pipeline.html
ITEM_PIPELINES = {
    "source.pipelines.NovelPipeline": 300,
    # "source.pipelines.TxtPipeline": 300,
}

# Enable and configure the AutoThrottle extension (disabled by default)
# See https://docs.scrapy.org/en/latest/topics/autothrottle.html
#AUTOTHROTTLE_ENABLED = True
# The initial download delay
#AUTOTHROTTLE_START_DELAY = 5
# The maximum download delay to be set in case of high latencies
#AUTOTHROTTLE_MAX_DELAY = 60
# The average number of requests Scrapy should be sending in parallel to
# each remote server
#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
# Enable showing throttling stats for every response received:
#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)
# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
#HTTPCACHE_ENABLED = True
#HTTPCACHE_EXPIRATION_SECS = 0
#HTTPCACHE_DIR = "httpcache"
#HTTPCACHE_IGNORE_HTTP_CODES = []
#HTTPCACHE_STORAGE = "scrapy.extensions.httpcache.FilesystemCacheStorage"

# Set settings whose default value is deprecated to a future-proof value
REQUEST_FINGERPRINTER_IMPLEMENTATION = "2.7"
TWISTED_REACTOR = "twisted.internet.asyncioreactor.AsyncioSelectorReactor"
FEED_EXPORT_ENCODING = "utf-8"
```
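The settings enable `source.middlewares.RandomUserAgentMiddleware`, but that class is not shown here. A minimal hypothetical version might look like the sketch below. The user-agent pool is only an example, and the only Scrapy hook it relies on is the standard downloader-middleware method `process_request(request, spider)`.

Downloader middlewares' `process_request` methods run in ascending order value. A middleware at 543 therefore runs after Scrapy's built-in `DefaultHeadersMiddleware` (400) and `UserAgentMiddleware` (500), so its assignment replaces the User-Agent that `DEFAULT_REQUEST_HEADERS` set.

```python
import random


class RandomUserAgentMiddleware:
    """Hypothetical sketch; the project's actual middleware is not shown."""

    # Example pool; replace with the user-agent strings you want to rotate.
    USER_AGENTS = [
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36",
        "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/14.1.1 Safari/605.1.15",
        "Mozilla/5.0 (X11; Linux x86_64; rv:89.0) Gecko/20100101 Firefox/89.0",
    ]

    def process_request(self, request, spider):
        # Plain assignment overwrites any User-Agent set by earlier middlewares.
        request.headers["User-Agent"] = random.choice(self.USER_AGENTS)
        return None  # None tells Scrapy to keep processing this request normally
```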

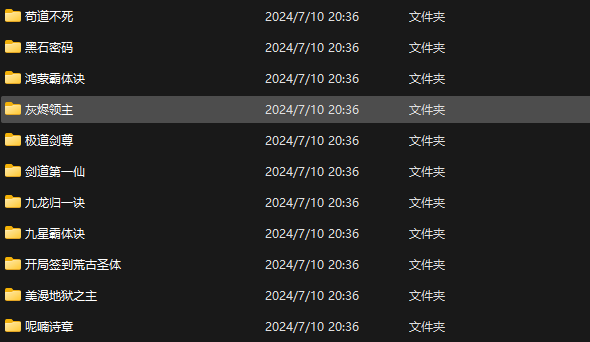

Result:

Merging the chapter files into a single book is left for later.
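For the chapter-merging step mentioned above, one possible approach is to concatenate each book's chapter files in file-name order. The sketch below is hypothetical: `merge_chapters` is not part of the project. It only produces reading order if the file names sort that way, for instance with zero-padded numeric prefixes. With the title-only names the pipeline currently writes, the chapters would come out in alphabetical order instead.

```python
from pathlib import Path


def merge_chapters(book_dir, output_file):
    """Concatenate every chapter .txt in book_dir into output_file.

    Chapters are written in sorted file-name order, each preceded by its
    file name (without extension) as a heading. Returns the chapter count.
    """
    # Collect the list before opening output_file, so the output is never
    # picked up as a chapter even if it lives inside book_dir.
    chapters = sorted(Path(book_dir).glob("*.txt"))
    with open(output_file, "w", encoding="utf-8") as out:
        for path in chapters:
            out.write(path.stem + "\n\n")
            out.write(path.read_text(encoding="utf-8").strip() + "\n\n")
    return len(chapters)


if __name__ == "__main__":
    # Layout written by NovelPipeline: books/<book name>/<chapter>.txt
    for book in sorted(p for p in Path("books").iterdir() if p.is_dir()):
        n = merge_chapters(book, Path("books") / f"{book.name}.txt")
        print(f"{book.name}: merged {n} chapters")
```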